Key Takeaways

- Anthropic’s guide emphasizes simplicity and transparency as crucial principles for building reliable AI agents.

- Effective agents are built on a core Large Language Model (LLM) enhanced with carefully chosen Retrieval, Tools, and Memory.

- It’s important to distinguish between predefined Workflows and dynamically adapting Agents, choosing the right approach for the task.

- Design patterns like Prompt Chaining (breaking tasks down with checks) and Evaluation Patterns (self-assessment cycles) improve agent reliability and performance.

- Agents are already showing value in customer support (performing actions beyond chat) and coding assistance (autonomous problem-solving with human oversight).

- The guide is praised for its practicality, but discussions continue around definitions and the role of development frameworks.

- Building adaptable systems designed to leverage future AI model improvements is key for long-term success.

Get ready, tech explorers! The world of Artificial Intelligence (AI) is buzzing louder than ever, and one of the most exciting developments is the rise of AI agents. Imagine smart helpers that don’t just answer questions but can actually do things for you – book appointments, manage your emails, or even help write computer code! It sounds like science fiction, but it’s becoming reality. Leading the charge is the AI safety and research company Anthropic. They’ve released a guide on building effective agents that lays out what works in practice. This isn’t just another technical manual; it’s a treasure map for developers wanting to create AI agents that are not only powerful but also reliable and trustworthy.

Dive in with us as we unpack the wisdom shared in Anthropic’s guide. We’ll explore the core ideas, the building blocks, and the clever patterns that make these agents tick. From helping customers to writing code, we’ll see how these agents are already making a difference. We’ll also look at what the experts think and what the future might hold for these incredible AI helpers. If you’re curious about how AI is learning to take action, you’re in the right place!

Keep it Simple, Keep it Clear: The Heart of Anthropic’s Agent Philosophy

When building something as complex as an AI agent, you might think the more complicated, the better. But Anthropic turns that idea on its head! Their guide shouts one message loud and clear: simplicity is key. Why? Think about building with LEGOs. If you use too many different kinds of complicated pieces, your creation might become wobbly and hard to fix if it breaks. It’s the same with AI agents. Keeping the design simple makes the agent easier to understand, easier to test, and much more reliable. Complex agents can behave in unexpected ways, making them harder to trust. Anthropic urges developers to resist adding unnecessary bells and whistles and focus on a clean, straightforward design.

Alongside simplicity comes another crucial principle: transparency. Imagine your AI agent is planning a surprise party for a friend. Wouldn’t you want to peek at the plan to make sure it’s getting the right cake and inviting the right people? Transparency in AI agents means making their thinking process visible. The guide highlights the importance of designing agents that clearly show their planning steps. When you can see how an agent decides what to do next, it builds trust. You can understand its reasoning, spot potential problems early, and feel more confident letting it handle tasks. This openness is vital for creating agents we can comfortably rely on.

The Building Blocks: What Makes an Agent Tick?

So, what are these agents actually made of? At the core, Anthropic explains, is an augmented Large Language Model (LLM). Think of an LLM as the kind of model behind ChatGPT or Anthropic’s own Claude – AI that’s great at understanding and generating text. But an agent needs more than just language skills. It needs superpowers!

Anthropic identifies several key enhancements that turn a basic LLM into the foundation of an effective agent:

- Retrieval: This gives the agent the ability to look up information. Just like you might search the web or look through your notes, a retrieval-augmented agent can access vast amounts of data – product manuals, databases, articles – to find the facts it needs to complete a task accurately.

- Tools: This is where agents get really exciting! Tools allow agents to interact with the outside world or other software. This could be anything from sending an email, booking a calendar slot, processing a payment, or even running code. Giving an LLM access to tools transforms it from a conversationalist into an actor.

- Memory: Humans rely on memory to learn and perform tasks over time. Agents need memory too! This allows them to remember past interactions, user preferences, or the steps they’ve already taken in a multi-step process. Memory helps agents provide more personalized and consistent help.

Crucially, the guide stresses that these capabilities shouldn’t be generic. They need to be carefully chosen and tailored to the specific job the agent is designed for. An agent helping with customer support needs different tools and information than one designed to write software. Furthermore, how the agent uses these building blocks needs a well-documented interface. This is like a clear instruction manual explaining exactly what tools the agent has and how they work, making the agent more predictable and easier to manage.
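To make the idea of a well-documented interface concrete, here is a minimal sketch of an LLM wrapper with retrieval, tools, and memory attached. Everything in it is an assumption for illustration: the `Tool` and `AugmentedLLM` classes, the `book_slot` function, and the toy keyword search are hypothetical names, not part of Anthropic's guide or any real library.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    """A capability the model may call, documented well enough to be used correctly."""
    name: str
    description: str             # what the tool does and when to use it
    parameters: dict[str, str]   # parameter name -> plain-language description
    run: Callable[..., str]


@dataclass
class AugmentedLLM:
    """Bundles the three augmentations around a model: retrieval, tools, memory."""
    documents: dict[str, str]                          # toy in-memory retrieval source
    tools: dict[str, Tool] = field(default_factory=dict)
    memory: list[str] = field(default_factory=list)    # running log of steps taken

    def retrieve(self, query: str) -> list[str]:
        # Naive keyword match stands in for a real search index.
        words = query.lower().split()
        return [text for text in self.documents.values()
                if any(word in text.lower() for word in words)]

    def describe_tools(self) -> str:
        # This text is what would be placed in the model's prompt.
        lines = []
        for tool in self.tools.values():
            params = ", ".join(f"{k}: {v}" for k, v in tool.parameters.items())
            lines.append(f"{tool.name}({params}) - {tool.description}")
        return "\n".join(lines)

    def call_tool(self, name: str, **kwargs: str) -> str:
        result = self.tools[name].run(**kwargs)
        self.memory.append(f"{name}({kwargs}) -> {result}")  # remember what was done
        return result


def book_slot(day: str) -> str:
    # Hypothetical calendar backend, hard-coded for the sketch.
    return f"booked {day}"


assistant = AugmentedLLM(
    documents={"manual": "Hold the reset button for five seconds to restart the device."},
    tools={"book_slot": Tool(
        name="book_slot",
        description="Reserve a calendar slot on the given day.",
        parameters={"day": "the day to book, e.g. Tuesday"},
        run=book_slot,
    )},
)
```

The point of `describe_tools` is that the tool's name, parameters, and purpose are written down once, in one place, so both the model and the developer read the same description.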

Workflows vs. Agents: Knowing the Difference

The terms “workflow” and “agent” are sometimes used interchangeably, but Anthropic makes a clear distinction, and it’s important for understanding how to build effectively.

- Workflows: Think of these as following a strict recipe. A workflow is a system where the steps are largely predefined. It uses LLMs and tools, but the path is mostly set in advance. For example, a workflow might automatically read incoming emails, use an LLM to categorize them (e.g., “Urgent,” “Invoice,” “Spam”), and then use a tool to move them into the correct folder. The steps are fixed: read, categorize, move.

- Agents: These are more like skilled chefs who can improvise. An agent is a system where the LLM itself dynamically figures out the process. It decides which tools to use, in what order, based on the goal and the situation. It can adapt its plan on the fly. For instance, you might ask an agent to “plan a weekend trip to the mountains.” The agent would need to decide to search for weather forecasts, look up cabin rentals, check driving routes, potentially book activities, and present you with options – all based on its understanding of your request, without a rigid, pre-written script for that specific task.

Understanding this difference helps developers choose the right approach. Sometimes a predictable, step-by-step workflow is best. Other times, the flexibility and adaptability of an agent are needed to tackle more complex or open-ended problems. Anthropic’s guide helps developers recognize when to use each approach, preventing them from building overly complex agents when a simpler workflow would suffice, or vice-versa.
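The contrast can be sketched in a few lines of code. In this rough illustration, the two `fake_llm_*` functions are hypothetical stand-ins for real model calls, and the tool names are made up. In the workflow, the code fixes the path. In the agent, the (simulated) model picks the next step based on what it has seen so far.

```python
from typing import Callable


def fake_llm_classify(email: str) -> str:
    """Stand-in for an LLM call that labels an email."""
    text = email.lower()
    if "invoice" in text:
        return "Invoice"
    if "urgent" in text:
        return "Urgent"
    return "Other"


def email_workflow(email: str, folders: dict[str, list[str]]) -> str:
    """Workflow: the steps are fixed in code (read -> categorize -> move)."""
    label = fake_llm_classify(email)
    folders.setdefault(label, []).append(email)
    return label


def fake_llm_next_action(history: list[str]) -> str:
    """Stand-in for an LLM choosing the next tool from what it has observed."""
    if not history:
        return "weather"
    if history[0].endswith("storm"):
        return "done"      # bad forecast: stop planning the trip
    if len(history) == 1:
        return "cabins"
    return "done"


def trip_agent(goal: str, tools: dict[str, Callable[[str], str]],
               max_steps: int = 10) -> list[str]:
    """Agent: the model decides which tool to call next, within a step limit."""
    history: list[str] = []
    for _ in range(max_steps):
        action = fake_llm_next_action(history)
        if action == "done":
            break
        history.append(f"{action}: {tools[action](goal)}")
    return history


folders: dict[str, list[str]] = {}
email_workflow("Invoice #12 attached", folders)

clear_weekend = trip_agent("mountain trip", {
    "weather": lambda goal: "clear",
    "cabins": lambda goal: "2 cabins available",
})
```

Note that the agent loop still has a `max_steps` cap. Giving the model control over the path does not mean giving up a stopping condition.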

Smart Strategies: Effective Patterns for Agent Design

Building an agent isn’t just about plugging in tools; it’s about designing how the agent thinks and acts. Anthropic highlights a couple of powerful patterns:

- Prompt Chaining: Imagine trying to solve a big math problem. You wouldn’t do it all in one go; you’d break it down into smaller steps. Prompt chaining applies this logic to AI agents. Instead of giving the agent one massive instruction and hoping for the best, the task is broken down into a series of smaller LLM calls (prompts). The output of one step feeds into the next. Importantly, there are intermediate checks along the way. This could involve the LLM checking its own work, using a tool to verify information, or even asking a human for clarification. This step-by-step approach with checks makes the agent much more reliable and less likely to make big mistakes. It’s like building a safety net into the agent’s thinking process.

- Evaluation Patterns: How does an agent know if it’s doing a good job? Evaluation patterns (the guide’s name for this is the evaluator-optimizer workflow) help agents assess and improve their own work. This often involves a cycle:

  - Generate: The agent produces an output (e.g., a piece of code, a summary, a plan).

  - Evaluate: The agent (or another process) assesses the quality of that output against certain criteria. Did the code run? Is the summary accurate? Does the plan meet all requirements?

  - Iterate: Based on the evaluation, the agent refines its output. If the code had bugs, it tries to fix them. If the summary missed key points, it rewrites it. This loop lets the agent correct its own output, getting closer to the desired outcome with each cycle.

These patterns provide structured ways to guide the agent’s behaviour, making them more methodical, self-correcting, and ultimately, more effective.
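Prompt chaining with an intermediate check can be sketched as follows. This is a simplified illustration, not the guide's code: `fake_llm` is a hypothetical stand-in for a real model call, and the "exactly three points" rule is an arbitrary check chosen for the example.

```python
from typing import Callable

LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call; returns canned text based on the prompt."""
    if prompt.startswith("Write a three-point outline"):
        return "1. Problem\n2. Approach\n3. Result"
    outline_lines = prompt.splitlines()[1:]
    return f"Paragraph covering {len(outline_lines)} points."


def run_chain(llm: LLM, task: str) -> str:
    # Step 1: a small, focused call.
    outline = llm(f"Write a three-point outline for: {task}")

    # Intermediate check: stop early instead of building on a bad outline.
    points = [line for line in outline.splitlines() if line.strip()]
    if len(points) != 3:
        raise ValueError(f"expected 3 outline points, got {len(points)}")

    # Step 2: the verified output of step 1 feeds the next call.
    return llm(f"Expand this outline into a paragraph:\n{outline}")


draft = run_chain(fake_llm, "why simple agents are easier to trust")
```

The check here is plain code, which keeps it cheap and predictable. It could just as well be another model call or a request for human confirmation.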

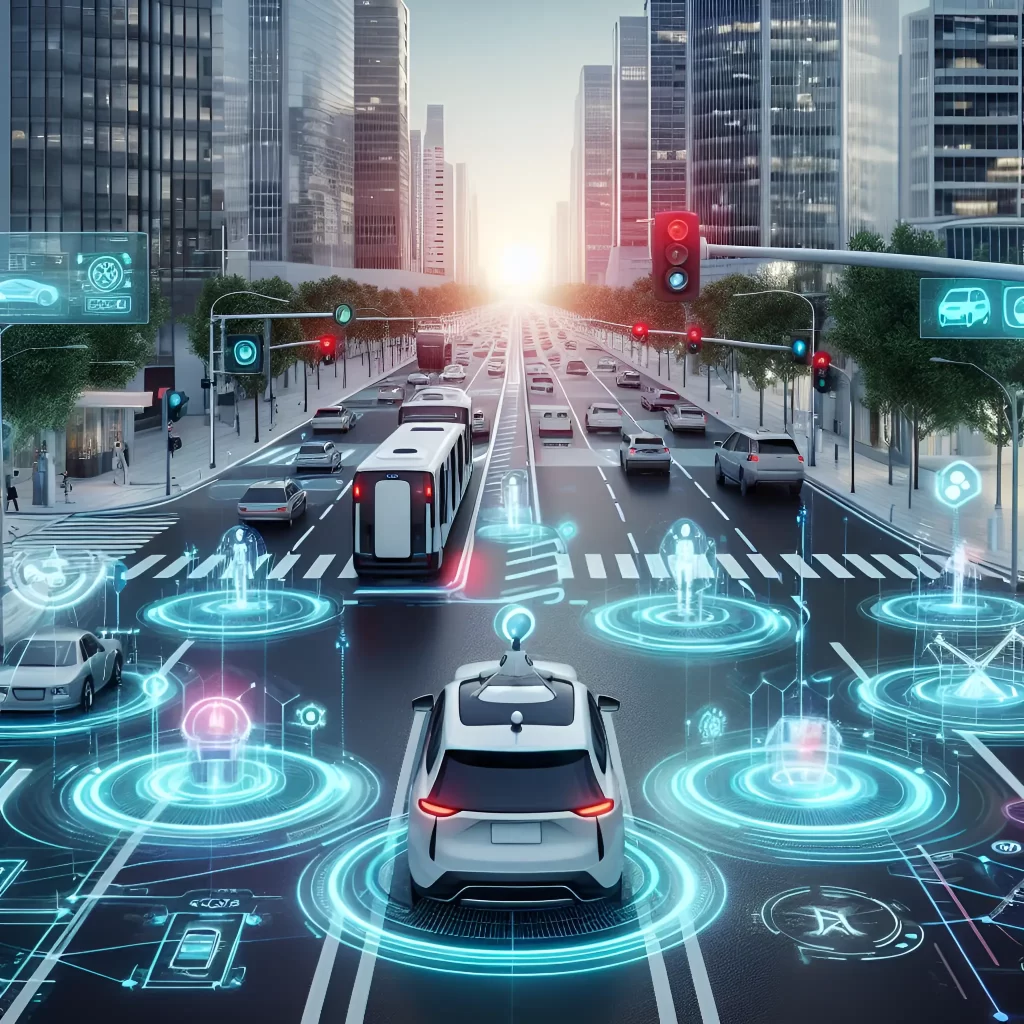

Agents in the Wild: Amazing Real-World Applications

Okay, the theory is cool, but where are these agents actually making a difference? Anthropic points to two key areas where agentic systems are already proving their worth:

1. Supercharged Customer Support:

We’ve all interacted with chatbots, but agents take customer support to a whole new level. By combining a familiar chat interface with tool integrations, agents can do much more than just answer frequently asked questions. Imagine a customer chatting with an AI assistant about a faulty product. Instead of just providing troubleshooting steps, an agent equipped with the right tools could:

- Access the customer’s order history.

- Check the warranty status.

- Guide the customer through specific diagnostic steps using its knowledge base (retrieval).

- If necessary, programmatically issue a refund or arrange for a replacement shipment using a tool connected to the company’s backend systems.

This is a huge leap! The agent isn’t just talking; it’s acting. Furthermore, Anthropic notes that success can be measured clearly through user-defined resolutions: for example, by checking whether the agent actually performed the action the user wanted, such as confirming the refund was processed. This creates a faster, more efficient, and often more satisfying experience for the customer.
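Here is a small sketch of what "measuring success by the action" might look like. The `Order` record, the `issue_refund` tool, and the in-memory `orders` table are all hypothetical. A real system would call the company's backend instead.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    in_warranty: bool
    refunded: bool = False


def issue_refund(orders: dict[str, Order], order_id: str) -> str:
    """Hypothetical tool the agent can call to act on the customer's behalf."""
    order = orders[order_id]
    if not order.in_warranty:
        return "refused: warranty expired"
    order.refunded = True
    return "refund issued"


def refund_resolved(orders: dict[str, Order], order_id: str) -> bool:
    # Success is read from backend state, not from how the chat sounded.
    return orders[order_id].refunded


orders = {
    "A100": Order("A100", in_warranty=True),
    "B200": Order("B200", in_warranty=False),
}
outcome = issue_refund(orders, "A100")
```

Checking `refund_resolved` after the conversation gives a yes-or-no answer to "did the customer get what they asked for?", which is much easier to track than a vague satisfaction score.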

2. Intelligent Coding Assistants:

Writing computer code can be complex and time-consuming. AI agents are emerging as valuable partners for software developers. These coding agents are particularly useful for autonomous problem-solving. A developer could describe a problem or a desired feature, and the agent could:

- Generate initial code attempts.

- Use tools to run the code and execute tests.

- Analyze the test results (evaluation).

- Iterate on the solution, fixing bugs and refining the code based on the test feedback.

This ability to try, test, and learn makes coding agents powerful assistants, speeding up development cycles. However, Anthropic wisely adds a note of caution: human review remains crucial. While an agent might write code that passes tests, a human developer still needs to ensure the code fits correctly within the larger system, follows best practices, and meets the overall project goals. It’s a collaboration, with the agent handling much of the iterative grunt work.
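The generate, test, and iterate cycle described above can be sketched like this. It is a toy under stated assumptions: `fake_generate` is a hypothetical stand-in for a model and simply returns canned attempts, and the "test suite" is a single check on an `add` function.

```python
from typing import Optional

CANDIDATES = [
    "def add(a, b):\n    return a - b\n",   # first attempt contains a bug
    "def add(a, b):\n    return a + b\n",   # revised attempt
]


def fake_generate(feedback: Optional[str], attempt: int) -> str:
    """Stand-in for an LLM; a real one would use the feedback to revise."""
    return CANDIDATES[min(attempt, len(CANDIDATES) - 1)]


def run_tests(source: str) -> Optional[str]:
    """Return None if the tests pass, otherwise a failure message to feed back."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable for a toy; real agents need a sandbox
    result = namespace["add"](2, 3)
    if result != 5:
        return f"add(2, 3) returned {result}, expected 5"
    return None


def solve(max_rounds: int = 3) -> tuple[str, int]:
    """Generate, test, and retry until the tests pass or the budget runs out."""
    feedback: Optional[str] = None
    for attempt in range(max_rounds):
        source = fake_generate(feedback, attempt)
        feedback = run_tests(source)
        if feedback is None:
            return source, attempt + 1
    raise RuntimeError("no passing solution; hand off to a human reviewer")


solution, rounds = solve()
```

Passing tests only ends the loop. It does not replace the human review the guide calls for, which is why the failure path hands off to a person rather than retrying forever.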

The Buzz Around the Guide: What Are People Saying?

Anthropic’s guide hasn’t just dropped into a void; it’s landed right in the middle of intense industry interest in AI agents. So, what’s the verdict?

Generally, the guide has been well-received for its practical, down-to-earth advice (Source). Many developers and businesses appreciate the focus on simplicity and reliability in a field that can sometimes get carried away with hype. It provides a clear roadmap for building useful systems now.

However, there’s also constructive discussion. Some experts feel that the definitions of “agentic” systems and “agents” could be sharpened further to ensure everyone is speaking the same language (Source). Clear definitions are important for consistent development and research.

Another point of discussion is the use of frameworks – pre-built software libraries designed to make agent development easier. While Anthropic’s guide advises caution against over-reliance on frameworks (preferring a deep understanding of the core principles), others argue that frameworks can be very useful accelerators, especially if developers understand how they work underneath (Source). The key seems to be finding a balance: use frameworks wisely, but don’t let them become a black box that hides the fundamental workings of the agent.

Looking Ahead: The Future of Agent Development

Anthropic’s guide is seen by many as a refreshing and pragmatic take in the rapidly evolving field of AI agent design (Source). Businesses are increasingly excited about the potential of agents to automate tasks, freeing up human workers for more creative or strategic activities (Source).

However, it’s also important to keep expectations realistic. Some aspects of agent capabilities might be overhyped right now (Source). The dream of agents performing highly complex, nuanced tasks completely autonomously without any significant human intervention or oversight is likely still some way off. Building truly robust and adaptable agents for complex, open-ended real-world scenarios remains a significant challenge.

Perhaps one of the most crucial pieces of advice for the future comes directly from the discussions surrounding the guide: developers should focus on building systems that are designed to improve as the underlying AI models get better (Source). AI technology is advancing at lightning speed. An agent built today might be based on an LLM that will be surpassed by a much more capable model in six months or a year. Therefore, the goal shouldn’t be to build a perfect, fixed solution for today’s models. Instead, developers should architect their agent systems in a modular way, designing them to easily leverage future model improvements to enhance the agent’s overall performance without requiring a complete rebuild (Source). Build for adaptability, not obsolescence.
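One way to read "build for adaptability" is to keep the model behind a narrow interface so it can be swapped without touching the rest of the agent. The sketch below assumes a hypothetical `Model` protocol and two made-up model classes. It does not reflect any specific vendor's API.

```python
from typing import Protocol


class Model(Protocol):
    """The only thing the agent needs from a model: text in, text out."""
    def complete(self, prompt: str) -> str: ...


class TodayModel:
    def complete(self, prompt: str) -> str:
        return "short answer"


class FutureModel:
    def complete(self, prompt: str) -> str:
        return "more detailed answer"


class SupportAgent:
    """Agent logic depends on the Model interface, not on a specific model."""
    def __init__(self, model: Model) -> None:
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.complete(f"Customer asks: {question}")


agent = SupportAgent(TodayModel())
before = agent.answer("How do I reset my device?")

agent.model = FutureModel()   # upgrade the model; the agent code is unchanged
after = agent.answer("How do I reset my device?")
```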

Want to Learn More? Dive Deeper!

Feeling inspired to learn more about building these amazing AI helpers? Anthropic has made their full insights available:

- Read Anthropic’s Full Guide: Get all the details straight from the source: https://www.anthropic.com/research/building-effective-agents

- Watch Expert Discussions: Explore different perspectives and analyses of the guide on YouTube: Check out video discussions here (Source) and here (Source).

Conclusion: Building the Future, Thoughtfully

Anthropic’s guide on building effective agents is a landmark contribution to the field. By championing simplicity, transparency, and careful design, it provides invaluable practical wisdom for anyone looking to create AI systems that can reliably take action in the world. From understanding the core building blocks like retrieval, tools, and memory, to applying clever patterns like prompt chaining and evaluation, the guide offers a clear path forward.

We’ve seen how these principles translate into powerful applications in customer support and coding, delivering real value today. While the community actively discusses nuances and definitions, the core message resonates: build thoughtfully, focus on reliability, and design for the future. Building effective agents is not just about creating smarter tools; it’s about building a future where AI assistants are trustworthy partners in our daily lives and work. The potential is immense, and with guides like this, developers are better equipped than ever to unlock it responsibly.

Frequently Asked Questions

What is the main message of Anthropic’s guide on building agents?

The core message is to prioritize simplicity, transparency, and reliability when designing AI agents. Avoid unnecessary complexity and ensure the agent’s actions and reasoning are as clear as possible.

What are the key components of an Anthropic-style agent?

An effective agent starts with a powerful Large Language Model (LLM) and enhances it with carefully selected and tailored capabilities: Retrieval (accessing external knowledge), Tools (interacting with software/the world), and Memory (remembering context and past interactions).

What’s the difference between a workflow and an agent according to Anthropic?

A workflow follows a largely predefined sequence of steps, using LLMs and tools along a fixed path. An agent uses the LLM to dynamically determine the sequence of actions and tool usage based on the goal and context, allowing for more flexibility and adaptability.

What are some effective design patterns mentioned?

Two key patterns are highlighted: Prompt Chaining, which breaks down complex tasks into smaller steps with intermediate checks for reliability, and Evaluation Patterns, which involve cycles of generating output, evaluating its quality, and iterating to improve.

Where are AI agents being used effectively now?

Anthropic points to two strong examples: customer support, where agents can take actions like processing refunds or checking order status, and coding assistance, where agents help developers by generating, testing, and refining code, though human oversight remains vital.