Key Takeaways

- AI safety measures are essential for ensuring artificial intelligence operates ethically and aligns with human values.

- Uncontrolled AI poses significant risks, including ethical breaches and unintended societal impacts.

- Government regulations and AI laws play a crucial role in managing these risks.

- Building safety protocols into AI development, through risk assessment, testing, and ongoing monitoring, is key to the responsible growth of AI technology.

Introduction

AI safety measures are becoming increasingly important as the technology advances. These measures help ensure that artificial intelligence operates safely and ethically, in line with human values. As interest in AI grows, it is essential to understand how governments regulate AI and what AI laws and compliance require. This post examines these areas and outlines the current state of AI safety and regulation.

Understanding AI Safety Measures

Defining AI Safety Measures

AI safety measures are protocols and practices designed to ensure that artificial intelligence systems operate safely and ethically. Their primary objectives are to prevent unintended consequences and to keep AI systems aligned with human values. In practice, this requires careful risk assessment before deployment and consistent monitoring afterward, which together reduce the risks of uncontrolled AI deployment.

For a foundational understanding of AI safety, OpenAI offers key insights, and the IEEE Standards Association provides guidelines on best practices. For more background, see What is AI? A Beginner’s Guide.

The Risks of Uncontrolled AI

The potential dangers of AI operating without proper safety measures include ethical breaches, loss of control, and unintended societal impacts. Without careful regulation, AI can lead to scenarios such as autonomous weapon deployment or biased decision-making systems impacting everyday life.

Industries such as healthcare, finance, and transportation are especially exposed to these risks. The Future of Life Institute highlights these dangers, and MIT Technology Review documents real-world AI failures that underline the need for careful regulation of AI technologies. For more on the ethical implications, see AI Ethics Explained: Navigating Bias, Privacy, and the Future of Work.

Can AI Become Dangerous?

Whether AI can become dangerous remains a subject of ongoing debate among experts. Some argue that AI poses existential threats to humanity, while others believe that, with proper regulation, AI’s benefits outweigh its risks. Past incidents such as Microsoft’s Tay chatbot, which was taken offline within a day of launch after users manipulated it into posting offensive content, show why AI systems need close monitoring to prevent misuse and unpredictable behavior.

Elon Musk has been vocal about AI’s potential threats, as detailed on Tesla’s blog. Meanwhile, Stanford’s AI Index presents case studies of notable AI incidents and the lessons drawn from them. For further perspective, see AI vs. Human Intelligence: What’s the Difference?

How Governments Regulate AI

Government involvement in AI regulation varies globally, with different regions adopting distinct approaches. Key frameworks include:

- European Union’s AI Act: Establishes comprehensive, risk-based rules for AI systems across industries.

- United States’ National AI Initiative: Sets out the U.S. government’s strategic approach to AI, as described on the White House website.

- China’s AI Policies: China’s New Generation AI Development Plan reflects an ambitious, state-led approach to AI development and governance.

These frameworks differ in stringency and effectiveness, reflecting each region’s own development priorities.

AI Laws and Compliance

AI laws and compliance requirements guide organizations in keeping their AI systems within ethical and legal standards. Key areas include data protection, transparency, and accountability. Non-compliance can result in fines, sanctions, and reputational damage.

The General Data Protection Regulation (GDPR) significantly affects AI by imposing strict requirements on how personal data is handled. The Center for AI and Digital Policy also offers guidance to help businesses achieve regulatory compliance and deploy AI ethically. For more on how AI affects the workforce, see How will AI and jobs change in the future?

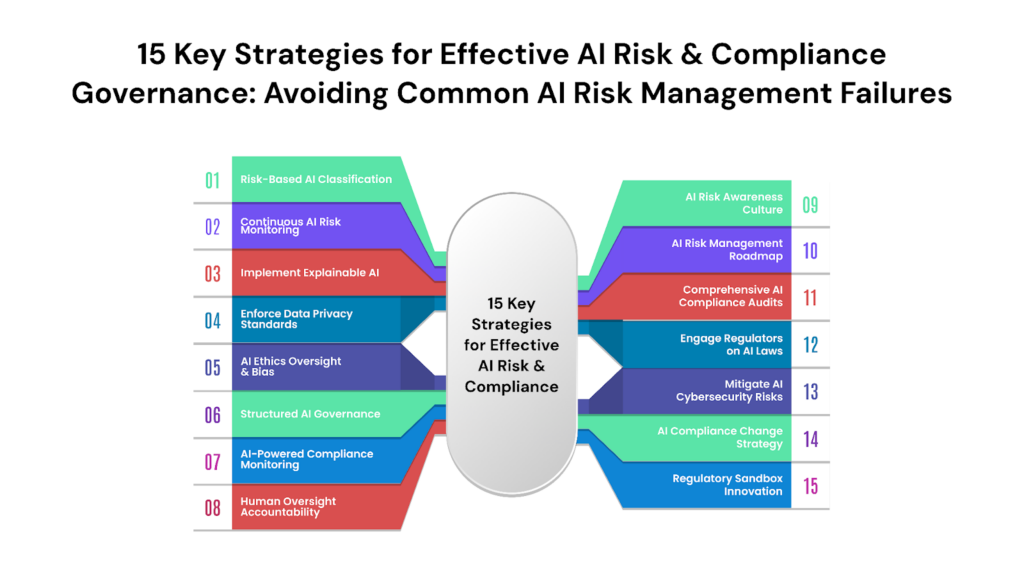

Implementing Effective AI Safety Measures

Integrating safety measures throughout the AI development lifecycle is critical for long-term system integrity and reliability. Best practices include conducting thorough risk assessments, using structured testing frameworks, and continuously monitoring deployed systems. Key ethical principles, such as fairness, accountability, and transparency, should be embedded in both AI design and operation.
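As a rough illustration of what continuous monitoring for fairness might look like, the sketch below computes per-group approval rates for a batch of model decisions and flags the batch for human review when the gap between groups exceeds a threshold (a "demographic parity gap"). The `Prediction` record, the group labels, and the 0.1 threshold are hypothetical choices made for illustration. Demographic parity is only one of several fairness metrics, and a real deployment would choose metrics and thresholds to suit its context and legal obligations.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    group: str      # hypothetical protected-attribute label, e.g. "A" or "B"
    approved: bool  # the model's decision for this case


def approval_rates(preds):
    """Return the share of positive (approved) decisions for each group."""
    totals, positives = {}, {}
    for p in preds:
        totals[p.group] = totals.get(p.group, 0) + 1
        positives[p.group] = positives.get(p.group, 0) + int(p.approved)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(preds):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(preds)
    if not rates:  # an empty batch has no measurable gap
        return 0.0
    return max(rates.values()) - min(rates.values())


def check_batch(preds, max_gap=0.1):
    """Flag a batch for human review if the gap exceeds max_gap (hypothetical threshold)."""
    gap = demographic_parity_gap(preds)
    return {"gap": gap, "needs_review": gap > max_gap}


if __name__ == "__main__":
    batch = [
        Prediction("A", True), Prediction("A", True),
        Prediction("A", False), Prediction("A", True),
        Prediction("B", True), Prediction("B", False),
        Prediction("B", False), Prediction("B", False),
    ]
    print(approval_rates(batch))  # {'A': 0.75, 'B': 0.25}
    print(check_batch(batch))     # {'gap': 0.5, 'needs_review': True}
```

Running a check like this on each batch of live decisions turns "constant monitoring" into a concrete, repeatable test whose alerts can be routed to a human reviewer.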

Notable examples include Microsoft’s Responsible AI initiatives and Google AI’s Responsible AI Practices, both of which demonstrate how organizations can successfully implement safety protocols.

The Future of AI Regulation

Future trends in AI regulation point toward greater international collaboration and the development of universal standards. As AI systems increasingly operate across borders, global standards that address their international impact are becoming more pressing.

Emerging AI safety methodologies, such as explainable AI and robust AI systems, offer promising pathways toward safer AI. The OECD’s AI Principles are likely to shape future regulatory frameworks, while the World Economic Forum offers perspectives on the anticipated landscape of AI governance.

Conclusion

AI safety measures are a cornerstone of the safe and ethical development of AI technologies. Robust regulation, backed by globally aligned government policies and effective compliance, is key to controlling the risks of deploying AI systems. Developers, regulatory bodies, and organizations share responsibility for advancing AI safety and compliance, and continuous improvement is needed to address emerging AI challenges.

FAQ

What are AI safety measures?

AI safety measures are protocols and practices designed to ensure that artificial intelligence systems operate safely and ethically, preventing unintended consequences and aligning with human values.

Why is regulating AI important?

Regulating AI is crucial to mitigate risks such as ethical breaches, loss of control, and unintended societal impacts, ensuring that AI technologies are deployed responsibly.

How do different governments approach AI regulation?

Government approaches to AI regulation vary globally, with regions like the European Union, United States, and China adopting distinct frameworks and strategies to manage AI deployment and development.

What are the consequences of non-compliance with AI laws?

Non-compliance with AI laws can result in fines, sanctions, and reputational damage, making it essential for organizations to adhere to ethical and legal standards in their AI implementations.