Key Takeaways

- It’s a digital shield! The “too many concurrent requests” message is not a bad thing. It’s actually a very smart protective measure. It helps stop the powerful AI servers from getting completely overloaded, which keeps them stable and working for everyone.

- It can be you, or it can be the server! This error can pop up because of how you’re using the AI (like sending too many messages too fast, or using special automated programs), or it can happen because the AI’s servers are just super busy with millions of other users.

- You have the power to help! There are many clever workarounds and solutions you can try to get back to your AI conversation. From simply waiting a little bit to checking the service’s status page or clearing your browser’s cache, you have tools in your hand to tackle this problem.

- Help is on the way! The amazing people who create these AIs are aware of the problem and are actively working on making things better. Recent reports even suggest that a fix is being rolled out, promising a smoother AI experience for everyone.

Imagine a world where powerful artificial intelligence, or AI, helps us learn, create, and explore new ideas every single day! Tools like ChatGPT have opened up amazing new ways for us to talk to computers and get answers to our burning questions. But sometimes, even in this exciting AI adventure, we hit a little roadblock. Have you ever been chatting with an AI friend, asking it questions, and suddenly you see a puzzling message pop up: “too many concurrent requests”? It’s like the AI suddenly put up a “Do Not Disturb” sign!

This week, we’re diving deep into this mysterious message, “too many concurrent requests”, to understand what it means, why it happens, and what we can do about it. Get ready to uncover the secrets behind this error that can sometimes put a pause on your AI conversations!

What in the World Does “Too Many Concurrent Requests” Even Mean?

Let’s start our investigation by understanding the core of the mystery. When you see “too many concurrent requests,” it simply means that the digital brain of the AI system, or the server that runs it, is feeling a bit overwhelmed. Think of it like a very popular ice cream shop. If everyone in town suddenly decided they wanted ice cream at the exact same moment, the poor ice cream scooper wouldn’t be able to keep up! The shop would get super busy, and they might have to ask some people to wait outside so things don’t get too messy.

In the world of computers and AI, a “request” is like asking a question or giving a command to the AI. “Concurrent requests” means many questions or commands are being sent to the AI at the same time. When the server gets “too many concurrent requests,” it has more questions in progress than it can handle all at once. The message itself isn’t a malfunction; it’s actually a very smart safety rule! It’s like a superhero shield for the server, designed to keep the entire system from crashing or slowing down for *everyone* using it. This clever protective measure helps make sure the service stays stable and works well for all its users.
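For readers who like to peek under the hood, here is a minimal Python sketch of how a server might cap the number of requests it works on at once. The class name `ConcurrencyLimiter`, its methods, and the limit of 2 are all made up for illustration; this is not how any particular AI service is actually built.

```python
import threading


class ConcurrencyLimiter:
    """Hypothetical gate that lets at most `max_in_flight` requests run at once."""

    def __init__(self, max_in_flight):
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self._lock = threading.Lock()  # keeps the counter safe across threads

    def try_start(self):
        """Return True if the request may start, False if the server is full."""
        with self._lock:
            if self.in_flight >= self.max_in_flight:
                # This is where a server would reply "too many concurrent requests".
                return False
            self.in_flight += 1
            return True

    def finish(self):
        """Call when a request has been answered, freeing a slot."""
        with self._lock:
            if self.in_flight > 0:
                self.in_flight -= 1


limiter = ConcurrencyLimiter(max_in_flight=2)
results = [limiter.try_start() for _ in range(3)]  # three requests arrive together
limiter.finish()                                   # one request completes
late_result = limiter.try_start()                  # a new request now fits
print(results, late_result)
```

In this sketch the third request is turned away only because two others are still in progress. Once one of them finishes, the next request gets in, which is why waiting a moment often works.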

The Secret Reasons Behind the Overload!

So, why does this digital traffic jam happen? Our research has uncovered a few key culprits:

- Everyone Wants a Piece of the AI Action! (Excessive User Activity): Imagine a huge school playground. If every single kid tries to go down the same slide at the exact same moment, there’s going to be a pile-up! Similarly, when tons and tons of people around the world are trying to use services like ChatGPT all at the very same time, the server might get overwhelmed. It’s simply too many people asking too many questions all at once for the system to process smoothly.

- The Need for Speed… But Not *Too* Much Speed (High Request Frequency): Sometimes, it’s not about how many people are using the AI, but how *fast* one person or a few people are sending messages. If you’re typing super fast and hitting ‘enter’ before the AI even has a chance to think, or if someone is using a special computer program to send messages automatically very quickly, it can feel like a flood to the server. This rapid-fire questioning can overload the system from a single source.

- Oops! A Digital Bump in the Road (Technical Bottlenecks): Sometimes, it’s not even about too many users or fast messages. Just like a road might have a small detour or a sudden construction zone, the digital “roads” that connect you to the AI can have temporary issues. There might be a small technical glitch, or a sudden, unexpected burst of data traffic that temporarily blocks the server’s ability to handle requests. These “bottlenecks” can cause the server to slow down or even pause, even if it’s not usually that busy.

- Playing by the Rules (Rate Limiting Exceeded): To keep things fair and running smoothly for everyone, AI services often have “rate limits.” Think of these as rules, like how many turns you can have on a swing set in a certain amount of time. These rules are put in place to stop one person or a few people from using up all the AI’s “brainpower” and leaving none for others. If a user sends too many requests in a short period, they might bump into these limits. When you hit your “rate limit,” the system says, “Hold on, you’ve asked enough questions for now, please wait a bit!” These limits are usually there to prevent abuse or overuse of the service.
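The “turns on a swing set” idea can be sketched in a few lines of Python. This is one common way to count requests, a sliding window, and it is only an assumption for illustration; the source does not say which method any AI service uses. The class name and the numbers (3 requests per 60 seconds) are hypothetical, and the clock is passed in by hand so the example is easy to follow.

```python
from collections import deque


class SlidingWindowRateLimiter:
    """Hypothetical limiter: at most `max_requests` per `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()  # times of recently allowed requests

    def allow(self, now):
        """Return True if a request arriving at time `now` (seconds) is allowed."""
        # Forget requests that are older than the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_requests:
            return False  # over the limit: the user has to wait
        self._timestamps.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=60)
# Requests arrive at 0s, 10s, 20s, 30s and 61s.
decisions = [limiter.allow(now=t) for t in (0, 10, 20, 30, 61)]
print(decisions)
```

The fourth request is refused because three turns were already used within the last minute. By the 61-second mark the oldest turn has expired, so the fifth request is allowed again.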

The Secret Code: Understanding HTTP Status Code 429

Did you know computers talk to each other using secret codes? One of these codes is like a special message that tells you exactly why something isn’t working. When you see “too many concurrent requests,” it’s often linked to a special technical message called HTTP status code 429 Too Many Requests.

This code, “429,” is like a polite but firm notice from the server saying, “I’ve received too many requests from you in a short amount of time, and I need you to slow down for a bit!” It’s a standard way for web servers to communicate that someone has been a bit too eager with their requests. The server then temporarily stops accepting more requests from that source to keep everything stable and prevent a bigger problem. So, if you ever see “429” alongside “too many concurrent requests,” you’ll know exactly what the computer is trying to tell you!
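If you talk to an AI service from your own program, a 429 response may come with a `Retry-After` header, which the HTTP standard defines as either a number of seconds or a date. The sketch below shows one way to turn that into a waiting time using only Python’s standard library. The function name `seconds_to_wait` and the 5-second fallback are assumptions for illustration, and a given service may not send this header at all.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def seconds_to_wait(status_code, retry_after, now=None, default=5.0):
    """Return how long to pause before retrying, or 0.0 if no pause is needed.

    `retry_after` is the raw Retry-After header value (or None if absent).
    `default` is an assumed fallback, not a value any service promises.
    """
    if status_code != 429:
        return 0.0
    if retry_after is None:
        return default
    value = retry_after.strip()
    if value.isdigit():
        return float(value)  # form 1: a delay in seconds, e.g. "30"
    try:
        retry_at = parsedate_to_datetime(value)  # form 2: an HTTP date
    except (TypeError, ValueError):
        return default  # unreadable header: fall back to the default pause
    if retry_at.tzinfo is None:
        retry_at = retry_at.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (retry_at - now).total_seconds())


print(seconds_to_wait(429, "30"))
```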

How This Mystery Affects YOU!

It’s one thing to understand the technical side, but what does “too many concurrent requests” actually feel like for you, the user? Our investigation shows it can be quite frustrating!

For people using ChatGPT, it’s been a real head-scratcher. Many users have reported seeing this error even when they’re just having a single, normal conversation with the AI on one device. They’re not sending a million messages or using multiple computers at once, yet the error still pops up. This suggests that sometimes, it’s not just about what *you* are doing, but more about the overall “busyness” of the server or other technical issues happening behind the scenes.

When this error strikes, your exciting AI conversation can come to a screeching halt. You might experience annoying interruptions in the service, find yourself unable to send messages, or notice that the AI takes an incredibly long time to respond, if it responds at all. It’s like having a wonderful chat with a friend, and then they suddenly freeze mid-sentence!

Your Secret Agent Toolkit: Solutions and Workarounds!

Don’t worry, brave explorers of the AI world! Just because you hit this roadblock doesn’t mean your adventure is over. There are many clever tricks and strategies you can use to try and solve or avoid the “too many concurrent requests” mystery. Let’s look at your special toolkit:

- Wait and Retry (The Patience Potion): Imagine you’re waiting for your turn on a popular playground slide. If too many kids rush at once, it gets jammed. So, you wait a minute, take a breath, and try again. That’s often all it takes when you see “too many concurrent requests” pop up on your screen. The server, like the playground, just needs a moment to clear up the rush. Sometimes, just a few short minutes can make all the difference, letting the digital traffic ease up and allowing you to reconnect smoothly.

- Check the AI’s “Status Page” (The Weather Report): Just like you might check the weather before going outside, you can check the “service status” page for the AI platform you’re using (like OpenAI’s status page for ChatGPT). This page is like a special bulletin board where the AI’s creators tell you if there are any known problems or “outages” happening. If there’s a big problem they’re working on, you’ll know it’s not just you!

- Slow Down Your Inputs (The Gentle Whisper): Remember the “high request frequency” problem? This solution tackles it! Instead of quickly sending many messages one after another, try to take your time. Send one message, wait for the AI to respond, and then send your next one. Think of it like a polite conversation, not a shouting match! This gives the server enough time to process each of your questions properly.

- Close Extra Tabs and Sessions (The Tidy Workspace): Are you talking to ChatGPT in more than one browser tab or on different devices at the same time? Each of those conversations can send its own requests to the server. If you have too many open, you might be sending more requests than you realize, triggering the error. Try closing any extra tabs or making sure you’re only chatting in one place at a time.

- Try a Different Device or Browser (The New Path): Sometimes, the problem isn’t with the AI itself, but with your specific device or the internet browser you’re using. If you’re on a computer, try switching to a phone or tablet. If you’re using one browser (like Chrome), try another (like Firefox or Edge). This can sometimes “reset” your connection and help you get back to talking to the AI.

- Clear Cache and Cookies (The Digital Cleanup): Your web browser stores little bits of information (called “cache” and “cookies”) to help websites load faster. But sometimes, these stored bits can get old or mixed up and cause problems. Clearing them out is like tidying up your digital space. It can help resolve weird glitches and make sure your connection to the AI is fresh and clean.

- Use a VPN or Change Networks (The Secret Tunnel): Sometimes, your internet service provider or even your school/work network might have its own rules about how much data you can send. A Virtual Private Network (VPN) can sometimes help by making it seem like your internet requests are coming from a different place. Or, if you’re on Wi-Fi, try switching to your phone’s mobile data, or vice versa. This can sometimes bypass network restrictions that might be causing the issue.

- Break Down Large Tasks (The Bite-Sized Approach): If you’re trying to send a very long story, a huge list of questions, or a really complex request to the AI, it might be too much for it to handle all at once. Try breaking your big question into smaller, more manageable parts. Send one part, wait for a response, then send the next. This makes it easier for the AI to process your request without getting overloaded.

- Reduce Request Frequency (The Steady Pace): This is similar to slowing down inputs but also applies if you’re using special computer programs (like coding scripts) to talk to the AI very often. If you have any automated tools sending requests, make sure they aren’t sending them too quickly. Giving the AI a steady, gentle flow of requests is much better than a sudden burst.

- Upgrade Your Plan (The VIP Pass): For some AI services, if you’re using a free version, you might have stricter “rate limits” than someone who pays for a premium plan. Think of it like getting a VIP pass at an amusement park: you might get to go on more rides, more often. If you find yourself hitting these limits frequently and you use the AI a lot, upgrading your plan might give you higher limits and fewer interruptions.

- Contact Support (Call for Backup!): If you’ve tried all these tricks and you’re still stuck, it’s time to call in the experts! The people who made the AI service have special teams to help users with problems. Don’t hesitate to reach out to their support team. They can look at what’s happening on their end and give you personalized advice or solutions.
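For anyone using scripts or automated tools, the “Wait and Retry” and “Reduce Request Frequency” tips above can be combined into one habit: when the service says it is too busy, pause, then try again, waiting a little longer each time. Below is a minimal sketch of that idea, often called exponential backoff with jitter. Everything named here is hypothetical: `TooManyRequests` stands in for whatever error your own client raises on a 429, `send` is any function of yours that makes one request, and the delays are example values rather than recommendations from any AI provider.

```python
import random
import time


class TooManyRequests(Exception):
    """Hypothetical error raised by `send` when the service answers with HTTP 429."""


def send_with_backoff(send, max_attempts=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep):
    """Call `send()` and retry with growing, randomized pauses on TooManyRequests.

    `sleep` can be swapped out (for example in tests) so no real waiting happens.
    """
    for attempt in range(max_attempts):
        try:
            return send()
        except TooManyRequests:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            # The cap doubles each attempt (1s, 2s, 4s, ...) up to max_delay.
            cap = min(max_delay, base_delay * 2 ** attempt)
            # A random pause below the cap keeps many clients from retrying in step.
            sleep(random.uniform(0, cap))


# Example use with a hypothetical function of your own:
#   reply = send_with_backoff(lambda: ask_the_ai("Hello!"))
```

The random part matters: if every waiting program retried at exactly the same moment, they would simply recreate the pile-up they were trying to avoid.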

Breaking News! The AI Community Responds!

Our thrilling journey to understand “too many concurrent requests” takes us to the forefront of AI development! The world of AI is always evolving, with new, even smarter models being created all the time. Recently, exciting new AI models like GPT-4o from OpenAI have been introduced, bringing incredible new powers to our digital friends.

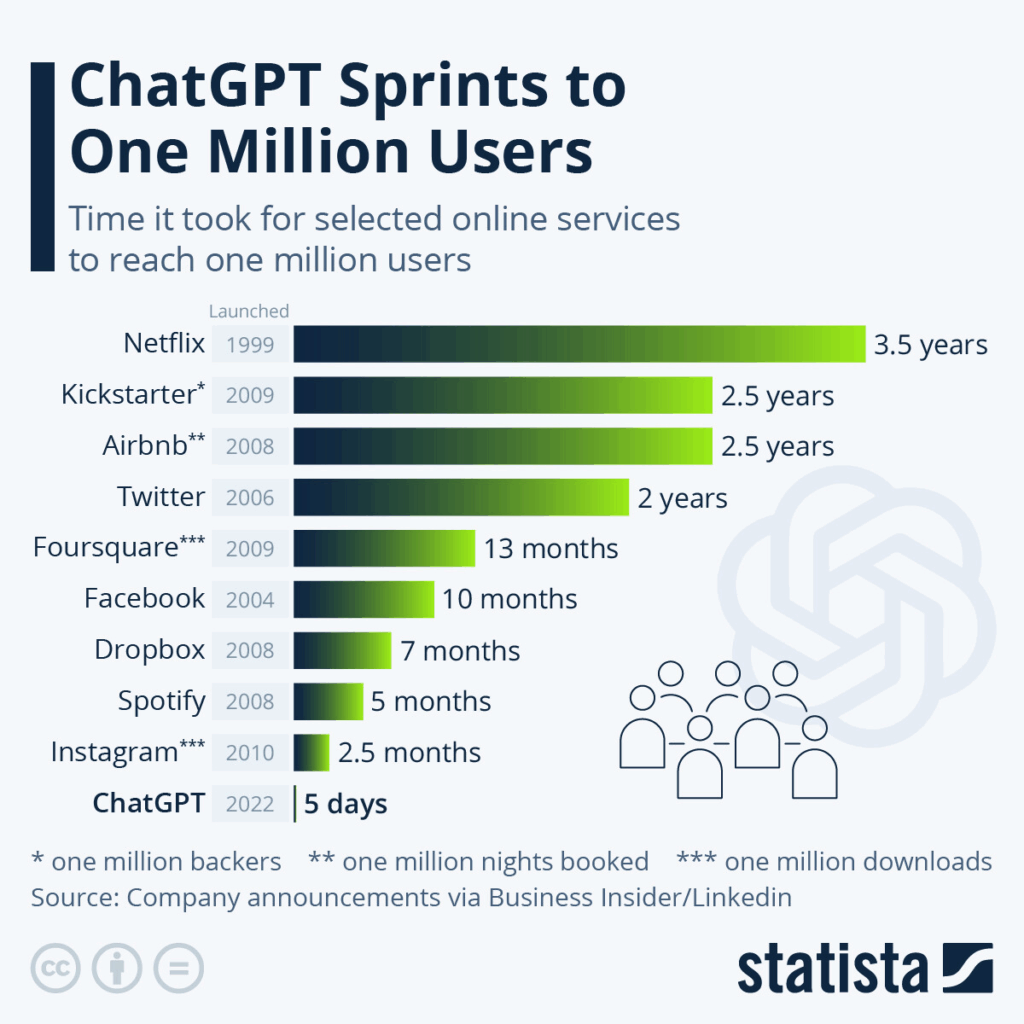

However, with great power can come new challenges! Users have been reporting that since these new, super-smart models came out, they’ve been seeing the “too many concurrent requests” error pop up even more often. This is happening even when people aren’t doing anything that seems “abusive” or sending messages super fast. It’s like the new, super-smart AI is so popular, it’s getting even *more* busy!

The AI community, which is made up of all the wonderful people who use and develop AI, has certainly expressed some frustration about this. It can be annoying when your powerful AI friend suddenly can’t talk to you! But there’s good news too: the brilliant developers behind these AIs are very much aware of the problem and are working super hard to fix it.

In fact, the latest reports are thrilling! As of May 2025, OpenAI, the creators of ChatGPT, have even stated that they’ve figured out the main reason this problem is happening. They’re now busy putting a special “fix” into place! This is fantastic news for everyone who loves talking to their AI companions, as it means smoother, less interrupted conversations are on the horizon.

The Big Takeaways: What We’ve Learned!

Our exciting investigation into “too many concurrent requests” has taught us a lot! Here are the most important things to remember from our quest:

- It’s a digital shield! The “too many concurrent requests” message is not a bad thing. It’s actually a very smart protective measure. It helps stop the powerful AI servers from getting completely overloaded, which keeps them stable and working for everyone. Without it, our AI friends might crash completely!

- It can be you, or it can be the server! This error can pop up because of how you’re using the AI (like sending too many messages too fast, or using special automated programs), or it can happen because the AI’s servers are just super busy with millions of other users.

- You have the power to help! There are many clever workarounds and solutions you can try to get back to your AI conversation. From simply waiting a little bit to checking the service’s status page or clearing your browser’s cache, you have tools in your hand to tackle this problem.

- Help is on the way! The amazing people who create these AIs are aware of the problem and are actively working on making things better. Recent reports even suggest that a fix is being rolled out, promising a smoother AI experience for everyone!

So, the next time you encounter the “too many concurrent requests” message, don’t despair! You’ll know exactly what’s happening behind the scenes, and you’ll have a whole arsenal of tips and tricks to get your AI adventures back on track. The world of AI is still growing and learning, and sometimes, even our smartest digital friends need a little break or a helping hand to keep everything running perfectly. Keep exploring, keep questioning, and keep having fun with AI!

Frequently Asked Questions

What does “too many concurrent requests” mean?

It means the AI system’s server is receiving more questions or commands at the same time than it can handle. The message is a safety measure that prevents crashes and keeps the service stable.

Why does this error happen?

It can be due to excessive user activity (many people using it at once), high request frequency from a single user, temporary technical bottlenecks, or exceeding rate limits set by the service.

What is HTTP status code 429?

This is a standard code from the server indicating that you have sent too many requests in a given amount of time and should slow down. It’s often linked to the “too many concurrent requests” message.

What can I do when I see this error?

You can try waiting and retrying, checking the AI’s status page, slowing down your inputs, closing extra tabs, trying a different device/browser, clearing cache/cookies, using a VPN, breaking down large tasks, reducing request frequency, upgrading your plan, or contacting support.

Are AI developers aware of this issue?

Yes. OpenAI, the creator of ChatGPT, has acknowledged the issue, which users have reported more often since the introduction of newer models like GPT-4o. OpenAI has stated that it identified the root cause and is rolling out a fix.